It has a compact tower case with chimney-style cooling. There isn't space on the inside for expansion slots. It has custom-made graphics hardware because the interior is so cramped. It tends to get hot. It's easy to work on because the case comes right off, but there's no point because all the components are proprietary. And that's the Apple G4 Cube.

But today we aren't going to look at the Cube. No sir. We aren't going to look at the Cube. I fooled you. I fooled you. There will be no matches for Mikey. Instead we're going to have a look at the 2013 Mac Pro, which was introduced in 2013 and discontinued six years later. Not a bad run given that it made people so much frumple. It came from heavy space, but really it was fingers.

The 2013 Pro was controversial. Professional Macintosh users weren't keen on the lack of internal expandability. PC owners such as myself ignored it, for essentially the same reasons that turned us off the Cube many years ago. As time went on a rash of graphics card failures and a reputation for unreliability did nothing to improve its image. It depreciated like mad on the used market. Apple didn't release a second generation, so the original line-up of Ivy Bridge Xeons remained on sale unchanged for six years, by which time they were five generations behind the curve.

The dual graphics cards. One of them also houses the computer's SSD, which strikes me as a bad idea.

Do you remember the 17" CRT Apple Studio Display? It was huge, power-hungry, prone to failure, and it was replaced almost immediately by a range of LCD monitors. But it lived on in the minds of others because it looked awesome. It still looks awesome.

There was a time when Apple set the pace, design-wise. The translucent plastic iMac inspired a wave of cheap imitations. In the mid-2000s Apple's clean white aesthetic was used in numerous Hollywood films as a visual shorthand for modern design. The aluminium-bodied MacBooks were copied extensively by other manufacturers, albeit that the copies used cheap silver-painted plastic instead.

I have the impression that Apple wanted the 2013 Mac Pro to be a modern style icon as well, but it didn't take off in the popular consciousness. It's cute, but it resembles a rubbish bin, and beyond a couple of novelty PC cases no-one copied it. There's a fine line between stylish minimalism and "this is just a featureless cylinder".

That's what the 17" Apple Studio Display looked like. On the cover of that book there. I took that photograph back in 2015 for this post here.

Very few technical gadgets make me feel sad. Imagine being a designer or a musician, at the top of your game, living in London in the late 1990s, with a Power Mac G4 and a 17" CRT monitor. The world is at peace, war is over, and the economy is doing great. You have been asked to make up a series of graphic ideas for Radiohead's new project. You get to meet the band, who hang out in your studio. They want to package the album in silver foil. What a time to be alive. You go to parties regularly. You have a sideline as a DJ.

But the work dries up, and a few years later you are reduced to pasting text over a photograph of Lisa Maffia because no-one buys records any more, and then you get a job for the local council overseeing housing because Will Young's record company is not interested in cutting-edge graphic design, and in any case you don't know what the cutting edge is any more. Perhaps there isn't one. Your 17" Apple Studio Display is long-gone. It broke, so you put it in a skip. Radiohead don't remember you. Your former studio space was demolished in 2013 and is now an anonymous block of flats. When you talk to your co-workers about postmodern visual design they have no idea what you're talking about.

That's what I think of when I think of the 17" Apple Studio Display. None of those things happened to me, but that's what I think of when I think of that monitor.

The 2013 Mac Pro depreciated like mad. I've said that before. It depreciated etc. As of 2022 it's an interesting proposition on the used market. The low-end models actually sell for less money than the later, i7-powered Mac minis, but they have much better graphics hardware and more ports. They're harder to send through the post, but not as hard as a full-sized 2006-2013 Mac Pro. The case seems purpose-designed to fit into a backpack, and it's a shame it was no good at games, because it would have been an awesome LAN party machine.

As a high-end graphics workstation the 2013 Mac Pro was an iffy proposition even when it was new, but as a cheap and possibly disposable internet surfing/general media machine that doesn't take up much space it's a much better idea, so after I found one cheaply on eBay I decided to push my fingers through heavy space and enjoy the sauce.

The border around the ports lights up when you move the chassis.

The Application of Lavender

Whether you love or merely adore Apple you have to admit that the company is never boring. But if you're a professional that's not necessarily a good thing. Professionals have a workflow that works and they want to keep it. They don't have time to mess around. The 2013 Mac Pro was a bold departure, almost an experiment, but it rubbed people up the wrong way and had some major problems.

Historically the very first professional Macintosh workstation was the Macintosh II of 1987. It was the first Macintosh with multiple expansion slots and a separate case, keyboard, and screen. The earlier Macintoshes all used the original classic all-in-one design.

From that point onwards Apple always sold at least one big tower case with masses of expansion slots and space for extra memory. Initially the machines had a bewildering range of number-based names. Do you remember the Power Macintosh 9515/132? Neither do I. But coincident with Steve Jobs' return the company standardised on G3, G4, G5 etc. Except that there was no etc, because the G5 was the last of the PowerPC Macintoshes.

The Power Mac G5 was launched in 2003. It introduced a huge aluminium case that still looks good today. I have one:

A few years later Apple switched from PowerPC to Intel, and in 2006 they launched the Intel-powered Mac Pro, which used essentially the same case, but with more internal space for expansion because the processors didn't need the enormous heatsinks and fans pictured above.

Why do I keep switching between Macintosh and Mac? I'm old-school. I can remember when they were Macintoshes. At some point in the early 2000s they became Macs. But in my heart they will always be Macintoshes.

The 2006-2013 Mac Pro had:

- four hard drive slots, of which one had the boot drive

- two spaces for optical drives, one of which had a DVD drive

- four PCIe slots, of which one had the graphics card

- eight RAM slots, of which typically six were already occupied

- five USB ports and four FireWire 800 ports

- two Ethernet ports

- optical digital audio input/output and conventional analogue 3.5mm audio jacks

The two optical drive bays could also be used for extra hard drives. The USB ports were USB 2.0, but you could add USB 3.0 with a PCIe card, if you could find the right card. The audio inputs were a nice touch, although I have the impression most users had a professional audio interface instead.

This generation of Mac Pro has aged well, and some people still use the later models. The very last 2006-2013 Mac Pro had twelve cores running at 3.06ghz, with space for 128gb of memory, and it supported a wide range of graphics cards, albeit that standard PC cards had to be modified to work. But it wasn't a particularly difficult task.

Apple kept the 2006-2013 Mac Pro up-to-date, but the company rarely talked about it. In the 1980s Apple was a computer company whose core markets were education and "the rest of us", provided we had a lot of money. In 2006 Apple had the iPod and iTunes, but it was essentially still a computer company.

By 2013, however, everything had changed. Apple was a mobile phone and tablet giant with a range of posh laptops. It still sold desktop computers, but they were not its main focus. I mention this because part of the negative reception of the 2013 Mac Pro came from a perception that it was a glorified Mac mini, and that Apple had given up on power users.

If it had just one PCIe slot it wouldn't have been so bad, but it had none at all, as if Apple wanted to make it clear that PCIe was beneath them. Apple's my-way-or-the-dual-carriageway attitude was not universally admired.

I'll describe the 2013 Mac Pro. It was built around two Big Ideas. Firstly it had no internal expansion slots at all. None. That was the first Big Idea. The 2013 Mac Pro had:

- one non-standard M.2-style SSD slot, which was already filled with the boot drive

- no spaces for optical drives

- no PCIe slots

- four RAM slots, of which three were already filled

- four USB 3.0 ports, plus six ThunderBolt 2 ports

- two Ethernet ports

- a combined optical/analogue 3.5mm audio output jack plus a separate headphone socket

I mean, yes, it had RAM slots and an SSD slot, but they were already filled up. You could replace or upgrade the components, but the only thing you could add was one more RAM stick. Could you swap the non-SSD graphics card with an SSD graphics card and have two SSDs?

No, you could not.

The Big Idea was that owners would use the USB and ThunderBolt ports to plug in external hard drives and PCIe enclosures instead of putting everything inside the case. This was possible because USB 3.0 and ThunderBolt 2 were much faster than their predecessors. The jump from USB 2.0 to USB 3.0 was roughly tenfold, with USB 3.0 transferring data at almost half a gigabyte a second. ThunderBolt 2 was even faster. Suddenly it was practical to use an external hard drive or SSD as if it was an internally-mounted boot drive. I have the impression that Apple's engineers became overwhelmed with the new world of high-speed external ports and decided that internal slots were passé.
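As a rough check on those speeds, here is a back-of-the-envelope sketch using the nominal signalling rates from the published specifications (480 Mbit/s for USB 2.0, 5 Gbit/s for USB 3.0, 20 Gbit/s for Thunderbolt 2). It assumes USB 3.0's 8b/10b line encoding and ignores protocol overhead, so it gives a ceiling rather than the speed you'd actually see copying files.

```python
# Nominal signalling rates in megabits per second, from the published specs.
USB2_MBPS = 480      # USB 2.0 "Hi-Speed"
USB3_MBPS = 5_000    # USB 3.0 "SuperSpeed"
TB2_MBPS = 20_000    # Thunderbolt 2


def usb3_payload_mb_per_s(line_rate_mbps: float) -> float:
    """Upper bound on USB 3.0 payload, in megabytes per second.

    USB 3.0 uses 8b/10b encoding (8 data bits for every 10 bits on the wire).
    Packet and protocol overhead are ignored, so real transfers are slower.
    """
    data_mbps = line_rate_mbps * 8 / 10
    return data_mbps / 8  # 8 bits per byte


if __name__ == "__main__":
    print(f"USB 2.0 -> USB 3.0: {USB3_MBPS / USB2_MBPS:.1f}x")
    print(f"USB 3.0 ceiling: {usb3_payload_mb_per_s(USB3_MBPS):.0f} MB/s")
    print(f"Thunderbolt 2 vs USB 3.0: {TB2_MBPS / USB3_MBPS:.0f}x raw rate")
```

The ratio comes out at about 10.4x and the USB 3.0 ceiling at 500 MB/s, which is where "roughly tenfold" and "almost half a gigabyte a second" come from.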

The machine also did away with FireWire, although there was an optional ThunderBolt-FireWire adapter. Apparently the headphone socket supported headset microphones, but not line in.

The memory. Apparently the black coating isn't just for show; it also acts as a heatsink. The same is true of the SSD just visible on the left.

The emphasis on external expansion wasn't necessarily a bad idea. Laptop owners were used to it, ditto fans of the Mac mini. But a lot of Mac Pro owners were musicians who had old PCIe audio interfaces. Or they were graphic designers who had old scanners or high-resolution printers that used special PCIe cards. Or perhaps they liked a particular graphics card and didn't want to use the cards that Apple shipped with the Mac Pro.

Some users were unhappy with the extra cabling involved with external hard drive enclosures. Some users simply wanted more than four USB ports without having to mess around with an external USB hub. In theory the six ThunderBolt 2 ports should have compensated for the relative lack of USB sockets. It's Thunderbolt, isn't it? Just the word Thunderbolt. Six Thunderbolt ports. In theory the six Thunderbolt 2 ports etc.

But this was 2013 and Thunderbolt was new, and there weren't many Thunderbolt peripherals. Even a short Thunderbolt cable from Apple cost around £30, and because it was an unpopular standard there weren't any cheap eBay knock-offs. There still aren't many cheaper options today.

Thunderbolt 2 was superseded by Thunderbolt 3 in 2015. Apple didn't upgrade the 2013 Mac Pro's ports, and in the absence of a built-in PCIe slot there's no way to upgrade the 2013 Mac Pro to use Thunderbolt 3 peripherals at Thunderbolt 3 speeds. You can't use the relatively new 6K Apple Pro Display XDR with a 2013 Mac Pro, for example, because it uses Thunderbolt 3. On the other hand the jump from Thunderbolt 2 to Thunderbolt 3 isn't huge, and there aren't many Thunderbolt 3-only peripherals.

All the way throughout the 2000s I never used FireWire. It was an Apple thing. I never used it until years after it had ceased to be a thing. I can however say that I used Thunderbolt while it was still alive (pictured).

The 2013 Mac Pro's second Big Idea was dual graphics cards, custom units supplied by AMD. One card ran the monitors; the second was held in reserve for general computing tasks such as video encoding.

I'm not a scientist. It strikes me that if OS X had been able to use the second card transparently it would have been a nifty idea. We're all used to transparent GPU acceleration of the desktop, so why not transparent acceleration of e.g. media playback or file compression?

Many years ago some of the higher-end Macintosh Quadras had a DSP chip. It sounded great on paper, but only a handful of applications supported it, so ultimately it was a big waste. Imagine if the DSP functionality had been baked into System 7 or MacOS 8.0, so that applications didn't have to explicitly support the DSP. Imagine if the operating system redirected audio-visual calls to the DSP without the application having to know about it. Wouldn't that have been great? Apple fans needed something to boast about back then. It would have made them happy. But it was not to be, and so the Quadra 840AV and its siblings became historical footnotes.

Sadly the 2013 Mac Pro's dual-GPU architecture had a similar problem. Applications had to be specially written to make use of it, but beyond Apple's own Final Cut not much made use of the second card. Even Final Cut itself ran most of its tasks on the CPU. Games couldn't use it.

Has that changed since 2013? Has a software patch fixed things so that MacOS can transparently use a spare GPU as a processing unit? No. The problem is that the 2013 Mac Pro was a one-off. Apple hasn't repeated the dual-GPU experiment since. The iMac Pro and subsequent Mac Pro used a single powerful graphics card, and the Apple Silicon Macs have an on-chip GPU. Apple does sell a configuration of the modern Mac Pro with twin GPUs, but that's just for extra monitor support, not extra computing power; Apple also sells an Afterburner PCIe media accelerator card, but it's an option, and not very popular.

As such the dual-GPU power of the 2013 Mac Pro had limited support and is unlikely to become more relevant in the future. In fact an awful lot of enthusiasts eventually bypassed the built-in graphics cards entirely and used Thunderbolt eGPU boxes instead.

Ironically Microsoft Windows running on the Mac Pro with Bootcamp can gang the two cards together and use them as a single super-card (read on), so Mac Pro users could get better performance from their machine in games by running Windows instead. That wasn't a great advert for OS X.

Was it OS X in 2013? When did it become MacOS?

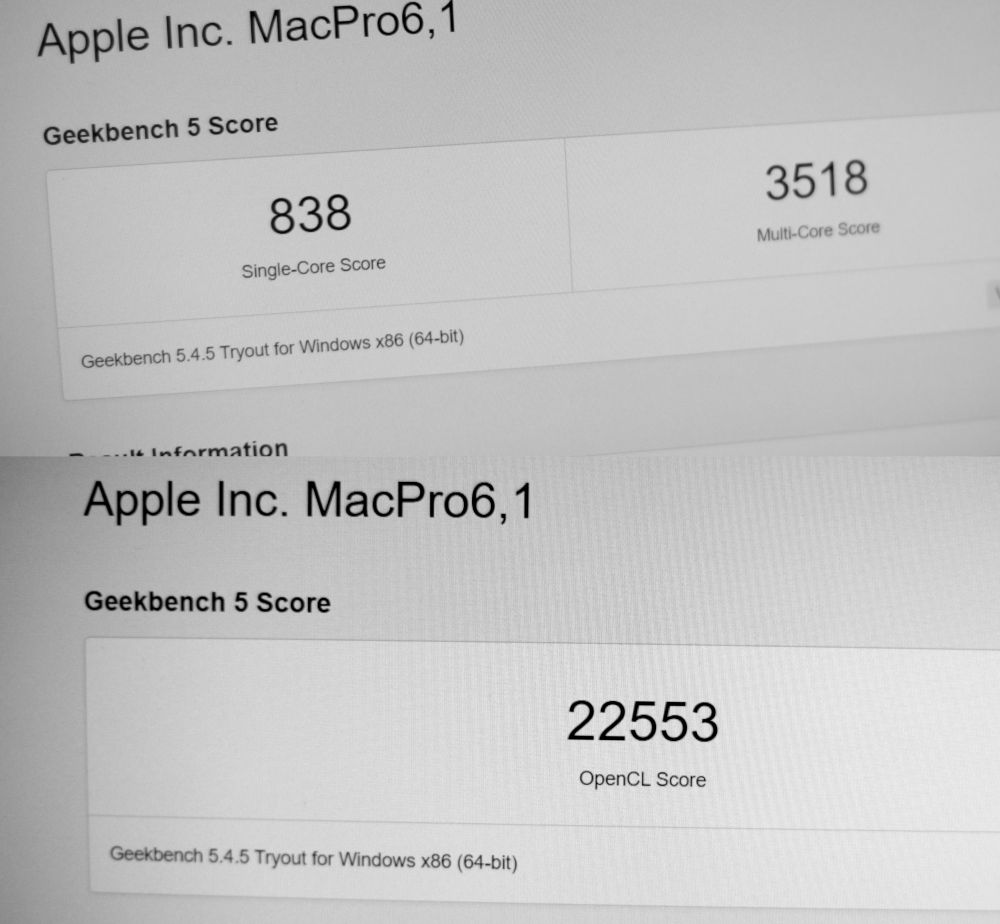

Sitting next to my 2012 Mac mini. For the record my dual-core 2012 2.5ghz i5 Mac mini has Geekbench scores of 655 (single-core) and 1409 (multi-core), vs 869 and 3509 for the 2013 Mac Pro.

2016. It was 2016. To make things worse the cards were of a non-standard size, with a proprietary connector, and they were handed. One card also had a slot for the system's SSD. The SSD slot was non-standard as well, a kind of modified M.2. It strikes me that if the 2013 Mac Pro had a single, powerful, normal-sized graphics card in one slot, and PCIe and SATA or regular M.2 ports in the other slot, it would have made more sense.

The non-standard graphics cards had some knock-on side-effects. The cards weren't available on the open market, and there were no third-party cards, so if they failed replacements had to come from Apple. As of 2022 the only practical way to replace a failed card is by cannibalising another Mac Pro.

There were only ever three different card options: D300 (2x2gb), D500 (2x3gb), and D700 (2x6gb). I'm not an expert on graphics cards, but from what I have read the basic D300 model in my Mac Pro isn't very impressive. The 2gb of memory is per-card, so although the system has a total of 4gb of graphics memory that sum is split into two separate 2gb chunks, one of which spends most of its time doing nothing. The D300 was prone to failure and was eventually withdrawn, with Apple eliminating the lowest-specced Mac Pro from the range in 2017.

Look at this:

Look at it. It's my desktop PC. I built it from parts in 2011. Most of this blog was made with it. The core is an ASRock H67M-GE motherboard. Initially the CPU was an Intel i5-2500K, a classic gaming chip from the early 2010s. As of 2022 the motherboard is the same, but I've fitted an SSD, a GeForce GTX 1650 graphics card, a more powerful power supply, more memory, and a new processor, a Xeon E3-1275 v2. Even so the machine is no longer bright or smooth. It can just, just run Microsoft Flight Simulator 2020 at 1920x1080 acceptably, but that is the absolute limit of its grasp, the point at which its waves break on the shore. It isn't compatible with Windows 11. It will never get better; it will live until it dies, just like us.

How much did it all cost? The original components were about £500, and I imagine I've spent the same again keeping it up-to-date, so over the course of a decade I've essentially spent £1,000 on it, £100 a year, for what was originally a pretty sweet PC that is still decently capable. In contrast my 2013 Mac Pro would have been £2,499 when it came out, in December 2013, although mine would have been even more expensive because it has 16gb of memory and a 512gb SSD (the basic model had 12gb and 256gb respectively). As of 2022 depreciation has eliminated around £2,000 from the initial purchase price.
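Put as a yearly figure, using the numbers above, the comparison looks like this. The nine-year span for the Mac Pro (December 2013 to late 2022) is my assumption, and the Mac Pro figure covers depreciation only, not upgrades or repairs.

```python
def cost_per_year(total_gbp: float, years: float) -> float:
    """Spread a one-off cost (or a loss in value) evenly over a number of years."""
    if years <= 0:
        raise ValueError("years must be positive")
    return total_gbp / years


# Desktop PC: roughly £500 of parts plus roughly £500 of upgrades over a decade.
pc_per_year = cost_per_year(500 + 500, 10)

# 2013 Mac Pro: about £2,000 lost to depreciation, assumed to be over ~9 years.
mac_pro_per_year = cost_per_year(2_000, 9)

print(f"PC: £{pc_per_year:.0f}/year, Mac Pro depreciation: £{mac_pro_per_year:.0f}/year")
```

That works out at £100 a year for the PC against roughly £222 a year in lost value for the Mac Pro.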

My graphics card is an Nvidia GTX 1650, a bog-standard 4gb card from 2019. It has twice the memory and is more than twice as powerful as the AMD D300s in the Mac Pro. On the surface it's an unfair comparison (the D300 is six years older), but if the Mac Pro had been built to take standard parts I could have upgraded the card with something better. I could in theory upgrade it with a pair of AMD D700s, but they're more expensive on the used market than the Mac Pro, and have limited resale value because they won't work in anything else.

While I'm moaning, the cards don't have heatsinks or fans. Instead the graphics chip butts up against a pair of heatsinks built into the Mac Pro's chassis, with a dab of thermal paste bridging the gap. The two cards are cooled by the same air tunnel that cools the CPU and the rest of the components, which puts me on edge. The components all generate different amounts of heat, and it seems to me that with a single thermal zone the cooling will never be 100% efficient. It would be interesting to know which component the fan takes its cue from, or if it averages them all out. I'm digressing here.
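To illustrate why that question matters, here is a purely hypothetical sketch of the two policies: a fan that follows the hottest sensor versus one that follows the average. The fan curve, the RPM limits, the sensor names and the temperatures are all invented for illustration; I don't know how Apple's firmware actually decides.

```python
from statistics import mean


def fan_rpm(temp_c: float, idle_rpm: int = 800, max_rpm: int = 2000,
            low_c: float = 50.0, high_c: float = 90.0) -> int:
    """Hypothetical linear fan curve: idle below low_c, flat out at high_c."""
    if temp_c <= low_c:
        return idle_rpm
    if temp_c >= high_c:
        return max_rpm
    fraction = (temp_c - low_c) / (high_c - low_c)
    return round(idle_rpm + fraction * (max_rpm - idle_rpm))


def rpm_following_hottest(sensors: dict[str, float]) -> int:
    # Policy 1: react to whichever component is currently hottest.
    return fan_rpm(max(sensors.values()))


def rpm_following_average(sensors: dict[str, float]) -> int:
    # Policy 2: react to the average, which can hide one hot component.
    return fan_rpm(mean(sensors.values()))


# Invented readings: one GPU working hard, the other idle.
readings = {"cpu": 60.0, "gpu_a": 88.0, "gpu_b": 52.0}
print(rpm_following_hottest(readings), rpm_following_average(readings))
```

With these made-up numbers the "hottest" policy runs the fan at 1940rpm while the "average" policy settles for 1300rpm, even though one GPU is almost at the top of the curve. That is exactly the worry with a single shared thermal zone.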

What about electricity? My desktop PC uses 50w of power when it idles. That's with a more advanced GPU, two SSDs, and a 3TB HDD. It goes up to about 80w when running Civilisation V. I think the highest I have seen was something like 150w when running the aforementioned Flight Simulator or encoding video.

Flight Simulator is unusual in that it makes heavy use of CPU power. Most games offload everything to the GPU, but Flight Simulator has to do a lot of complicated maths.

In contrast my 2013 Mac Pro idles at around 63w, although it ranges from 59-100w after it boots up. When running Civilisation V it jumps up again to around 140w. I haven't tried to edit masses of 4K video but I shudder to think what it must be like. In its defence the Mac Pro is almost silent whereas my PC makes a constant whooshing sound, but I can put up with that. I can be together for friendly dessert.
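To put the idle figures in perspective, the 13w difference can be turned into energy per year. The eight hours a day of idling is an assumption for illustration; multiply the result by your own electricity tariff to get a cost.

```python
def kwh_per_year(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy used by a constant load, in kilowatt-hours per year."""
    return watts * hours_per_day * days / 1000


PC_IDLE_W = 50       # desktop PC at idle, as measured above
MAC_PRO_IDLE_W = 63  # 2013 Mac Pro at idle, as measured above

# Assumption: the machine sits idle for 8 hours a day, every day.
extra = kwh_per_year(MAC_PRO_IDLE_W - PC_IDLE_W, hours_per_day=8)
print(f"Mac Pro idles at {MAC_PRO_IDLE_W - PC_IDLE_W}w more, about {extra:.1f} kWh/year extra")
```

Under that assumption the Mac Pro's extra idle draw comes to roughly 38 kWh a year.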

I don't have any way of formally benchmarking either system.

Civ V is fast and smooth on my PC, which is understandable given that it's running on a graphics card that was released nine years after the game came out. On my Mac Pro it's generally okay, but slightly jerky, and the landscape doesn't redraw as quickly, I assume because the graphics card has less memory. When I scroll somewhere it takes a split-second for the terrain to redraw. Imagine if that happened in real life! It would be a dead giveaway, wouldn't it? But perhaps it does happen and we just accept it.

Let's run Geekbench. The Mac Pro results for CPU and GPU computation look like this; note that EveryMac's CPU figures are 811 (single-core) and 3234 (multi-core):

My PC looks like this:

If Geekbench is to be believed my PC's CPU is slightly slower than the Mac Pro's, albeit only by 5-10%, but my graphics card is about 80% more powerful. I assume the gap would be narrower if the two cards in the Mac Pro could be made to run simultaneously. Why are my figures different from EveryMac and CPUBenchmark? I have no idea. Ambient temperature? Air pressure? Mascons?
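The other CPU scores quoted in this post can be turned into percentages in the same way. These are my Geekbench results for the 2012 Mac mini (655 single-core, 1409 multi-core) and the 2013 Mac Pro (869 and 3509), plus EveryMac's Mac Pro figures (811 and 3234), which I'm assuming are single-core and multi-core respectively. This is just arithmetic on those numbers, not a new benchmark.

```python
def percent_faster(score: float, baseline: float) -> float:
    """How much higher `score` is than `baseline`, as a percentage."""
    return (score / baseline - 1) * 100


# Geekbench CPU scores quoted in this post: (single-core, multi-core).
mac_mini_2012 = (655, 1409)
mac_pro_2013_mine = (869, 3509)
mac_pro_2013_everymac = (811, 3234)  # assumed to be single-/multi-core

comparisons = {
    "Mac Pro vs Mac mini": (mac_pro_2013_mine, mac_mini_2012),
    "My Mac Pro vs EveryMac": (mac_pro_2013_mine, mac_pro_2013_everymac),
}

for label, (scores, baseline) in comparisons.items():
    single = percent_faster(scores[0], baseline[0])
    multi = percent_faster(scores[1], baseline[1])
    print(f"{label}: single-core {single:+.1f}%, multi-core {multi:+.1f}%")
```

By these numbers the Mac Pro is roughly a third faster than the mini on a single core and about two and a half times as fast across all cores. My own results come out roughly 7-9% above EveryMac's.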

Bootcamp

This prompted me to try out BootCamp, which is an official Apple utility that lets you install Windows onto Macintosh hardware. I've never used it before because I already have a Windows PC. Sadly Bootcamp is on its last legs. It only works with Intel-powered Macintoshes, and the most up-to-date version of Windows it supports is Windows 10, which will only be sold until January 2023 and supported until 2025. If you want to run Windows 11 on an Apple Silicon Mac the only option, as far as I can tell, is to use a virtual machine.

I put a 240gb SSD into an external USB 3.0 case, downloaded a trial version of Windows 10 Home (I just wanted to see if it works), and ran BootCamp. At which point I realised that BootCamp won't install Windows onto an external drive. BootCamp was released in 2006, a couple of years before USB 3.0, and I wonder if it simply wasn't updated to reflect a newer generation of super-speed interfaces.

In the end I followed the instructions in the top answer here at StackExchange ("the internet answer site that actually has useful answers (tm)"), by David Anderson (no relation). The process involves typing in a bunch of lengthy command lines with forward and backward slashes, and you have to be careful not to torch the wrong drive, but it went surprisingly smoothly, and before long I had Windows running. Installing updates involves a lot of rebooting (BootCamp'ed Windows doesn't seem to be able to reboot into itself) but that wasn't a big problem.

What sorcery is this:

Intriguingly AMD has a Windows utility called CrossFireX that will gang the two graphics cards together:

In Windows 10 Civilisation V ran at a steady 60fps without jerking or slowly loading scenery tiles. It definitely performed better in Windows than in MacOS, but I can't tell if that was because of better driver support, or because the MacOS port is bad, or if it's CrossFireX, or even if CrossFireX works or not.

By "talk" Bradford means "brainwash with electricity".

The cream of the world's armed forces in action. The Bootcamped-into-Windows 2013 Mac Pro runs XCOM: Enemy Within (2013) without any problems. EW was released for MacOS but it's 32-bit only, so it doesn't work with MacOS 10.15 Catalina or later. XCOM 2 on the other hand is MacOS native.

Incidentally I was Holland. The first time I played Civ V I was Austria, and it was a walkover because Austria can capture city states by marrying them if you're friendly for five turns, which is easy. By the middle of the game I was swimming in cash. I had artillery long before anybody else, which is a guarantee of victory in Civ V. In comparison Holland's special ability is rubbish - something about retaining happiness if you trade away your luxury resources - and I never had the same dominant position, but I did manage to win. I can't remember why. I think it was just a time victory.

I should really have tried out Doom Eternal or something similar, but it's a 60gb download and I'm wary of frying the machine. Does it run Crysis? Probably, but Crysis is difficult to get working even on modern Windows PCs. If nothing else, however, a 2013 Mac Pro will run Civilisation V and XCOM: Enemy Within well with Bootcamp. With MacOS Monterey I have twelve Steam games; with Windows 10 I have many more:

I decided to run Geekbench under Windows to see if CrossFire would affect the computational scores, but it doesn't:

The numbers aren't identical but the differences are tiny. I was pleasantly surprised at how well Bootcamp worked. Boot Camp. It's Boot Camp. Two separate words. Windows 10 worked fine running from a USB 3.0 external SSD. If Boot Camp was easier to set up, or could be automated, the 2013 Mac Pro might be a perfectly decent cheap second-hand mid-2010s Windows gaming machine, albeit with weak graphics hardware that you can't upgrade. But unlike a PC it's not a fragile box of bits held together with little screws and gaffer tape.

Let's talk about the Mac Pro again. On paper it's highly repairable. iFixit gave the machine a good score. It can be stripped fairly easily to its components using just a couple of security screwdrivers. But almost all of the components are proprietary parts only available from Apple, so although the machine is repairable it isn't cost-effective to do so. If one of the GPUs fails a replacement isn't much cheaper than an entire Mac Pro. The only source of replacement power supplies is cannibalised Mac Pros. Ditto the fan, the I/O boards, and the case itself.

As a consequence the Mac Pro feels like those Star Wars LEGO kits where the bricks are specially-made and only fit one way. You can take the kit apart, but all you can do at that point is put the bricks back together again exactly as they were. The big exceptions are the RAM and the CPU. The 2013 Mac Pro takes a maximum of 128gb if you're really keen. Replacing or upgrading the CPU involves stripping the machine down completely, but beyond that the motherboard has no problem accepting higher-clocked, multi-core Xeons. I suggest downloading Macs Fan Control if you do that, and setting it up to run the fans at a higher speed than the built-in firmware.

Apple lost interest in the 2013 Mac Pro long before it was discontinued. It's easy to describe the range because there was only one generation. There were four models, ranging from 3.7ghz/four-core to 2.7ghz/twelve-core, but apart from the CPU and GPU the rest of the hardware was the same. The general consensus in 2013 was that the six-core, 3.5ghz model was the most cost-effective. The machines can apparently be upgraded with any contemporary Ivy Bridge-based Xeon processor. As of this writing the ten-core E5-2690 v2 is a popular choice because it doesn't suffer from "eBay Apple tax".

In 2017 Apple discontinued the 3.7ghz quad-core budget model and cut the price of the other configurations, and in June of that year the company announced that they were working on a "completely redesigned" successor, which was an unusual admission. Apple normally shrouds its forthcoming products in secrecy. Despite essentially admitting that the 2013 Mac Pro was a dead duck, Apple continued to sell the machine until December 2019, when it was replaced by a new model housed in a metal case that resembled the old Mac Pro of yesteryear. And that is the whole history of the 2013 Mac Pro, right there, in two paragraphs.

The new Mac Pro has also been controversial, mainly for its high price. I'm old enough to remember when DEC Alpha and Silicon Graphics workstations cost £12,000, and it's not difficult to specify a Mac Pro for that kind of money. One of the memory upgrade options, for a ludicrous 768gb of RAM, is £13,700 by itself. The optional wheels are £400. The accompanying 32" 6K Pro Display costs £4,599, but that doesn't include the optional stand, which is £949.

DEC and SGI workstations justified their high price because nothing else could touch them at the time, but the modern Mac Pro just feels overpriced. On the positive side it's built to an extremely high standard, but with the looming move to Apple Silicon I suspect that the finely-engineered aluminium case will probably age better than the innards. This is something it shares with the Power Mac G5; I bought my G5 purely for the awesome case before I decided to actually use it as a computer.

The Mac Pro name may not be around forever. In late 2020 Apple introduced Apple Silicon, a completely new CPU architecture derived from the same kind of ARM chips that are used in the iPad and iPhone. As of this writing Apple hasn't released a professional-level Silicon machine, but the M1 Mac mini and the M1 and M2 MacBooks are strong performers that out-benchmark the 2019 Mac Pro in all but multi-core tasks. Furthermore in 2022 Apple released the M1-powered Mac Studio, which is conceptually similar to the 2013 Mac Pro and fills a very similar niche. Only time will tell if the Mac Pro name and design philosophy will continue with Apple Silicon internals or if it will fade into history.

After all that, what's the Mac Pro like? I've had a chance to research and write this blog post and play a bit of Civilisation V. I can confirm that the 2013 Mac Pro will surf the internet. It runs MacOS Monterey, which as of this writing is the previous version of MacOS, its successor having been released only a few days ago. Why did I buy one? Here's that picture of my Mac mini again:

The mini is a 2012 model. Performance-wise it's more than enough for music and most other tasks, and it has a FireWire port, which is why I bought it, so that I could use my old MOTU 828 audio interface. Unfortunately it tops out at MacOS 10.15 Catalina.

It can be patched to run MacOS 11 Big Sur, but the results aren't pretty. It works, but it's very slow, presumably because the mini's integrated graphics chipset is really naff. When I tried it Civilisation V was unplayably slow even with the graphics options turned down. The CPU heated up to 90°C, as if the game was using software rendering.

In contrast the 2013 Mac Pro runs Civ V well, albeit not perfectly, and it opens up Logic in a flash. MacOS Monterey will presumably be supported for several years, and Chrome for many years after that. Can it be patched to run MacOS Ventura? Probably, although Ventura has only been out for a few days, so I imagine DosDude and GitHub's Barrykn etc are working on it. There will, however, come a point when MacOS drops support for Intel processors, which probably won't be that far in the future, even though Apple still sells a couple of Intel Macintoshes brand new (a mini, and the 2019 Pro).

Do you remember when reviews of OS/2 Warp in PC Shopper or whatever had screenshots of the desktop with a bunch of random windows open just to show that you could put one window on top of another? This is Monterey:

It does multimedia.

Historically, a handful of PowerPC machines were released after the decision to switch processors had already made them obsolete, notably the Power Mac G5 Quad. OS X continued to support the architecture for four years, and the Quad remained viable for a few years after that, but my hunch is that Apple will switch to Silicon faster and more thoroughly than it switched to Intel, with owners of the last Intel Mac Pros probably getting very annoyed in the process.

In Summary

When it was new the 2013 Mac Pro was a really hard sell. In six years of production it never found a niche, although as Vice magazine pointed out at least one data centre bought thousands of them to use as rackmounted servers (the machine seems to work just as well on its side). As of 2022 it's an interesting alternative to a Mac mini as a home media centre or general desktop computer, and it runs Windows 10 spiffingly with Boot Camp. It may not have been particularly great at encoding 4K video, but it has no problem playing it back. It supports three 5K displays, so with a sufficiently long set of Thunderbolt 2.0 cables you could play a film on one monitor while surfing the internet on another, with a third just for show.

A mini uses less power and is thus cheaper to run, but even the most powerful Intel mini is hobbled by built-in Intel HD graphics, which even in its 2018 incarnation is weaker and supports fewer monitors than the D300 GPUs in the base 2013 Mac Pro. There is of course the question of long-term reliability, but I don't envisage using my Mac Pro to edit 4K video. It will lead a comfortable life until, many years from now, I will repurpose the metal case as a plant pot or miniature umbrella holder, the end.